Developing a mixed reality computational workflow combining 3D depth sensing and virtual reality (VR) to enable iterative user-centered design.

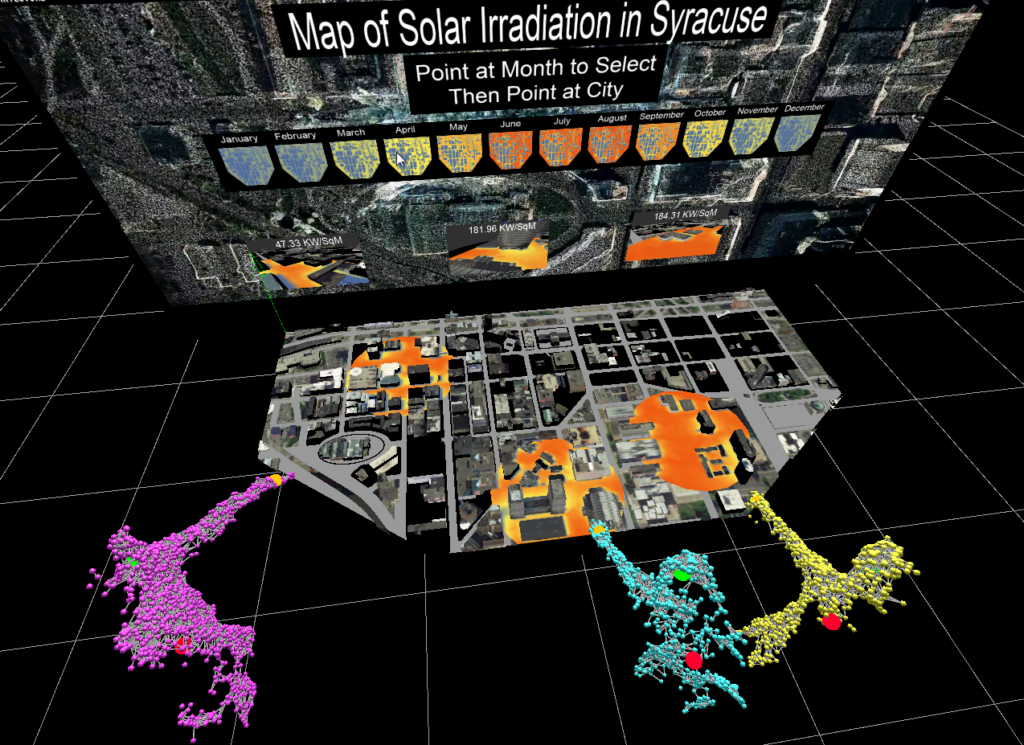

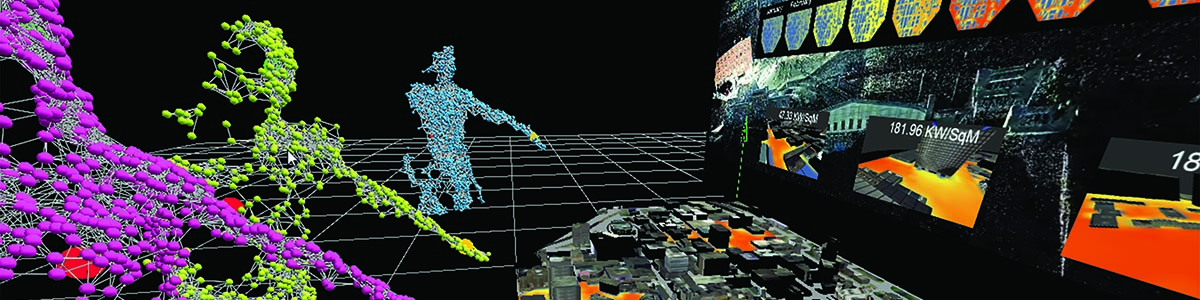

Using an interactive museum installation as a case study, visitors' pointcloud data, captured through 3D depth sensing, is observed by the designer in VR at full scale and in real time, creating a new kind of design feedback experience. Through this method, designers can virtually position themselves among the installation's visitors, observe their actual behavior in context, and make design modifications immediately and iteratively. In essence, the designer and the users share the same prototypical design space from different realities. Experimental deployment and preliminary results of this shared-reality workflow demonstrate the method's viability for the museum installation case study and for future interactive architectural design applications.
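The summary does not describe the sensor pipeline itself. As a minimal sketch, assuming a pinhole depth camera with known intrinsics (`fx`, `fy`, `cx`, `cy`, in pixels) and depth values in meters, one depth frame can be back-projected into a metric point cloud. That cloud can then be placed in a shared VR world frame with a rigid transform (`R`, `t`) assumed to come from a sensor-to-VR calibration step. The function names and parameters are hypothetical illustrations, not the team's implementation.

```python
import numpy as np

def depth_to_pointcloud(depth_m, fx, fy, cx, cy):
    """Back-project an HxW depth image (meters) into an Nx3 point cloud
    in camera coordinates using a pinhole model (hypothetical helper)."""
    h, w = depth_m.shape
    # u = column (pixel x), v = row (pixel y); both arrays have shape (h, w)
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    z = depth_m
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    points = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    # Zero depth is treated as "no sensor return" and dropped
    return points[points[:, 2] > 0]

def to_world(points, R, t):
    """Apply a rigid transform (3x3 rotation R, translation t) so that
    camera-frame points land in an assumed shared VR world frame."""
    return points @ R.T + t

# Usage: a tiny 2x2 frame with one invalid pixel
depth = np.array([[1.0, 0.0],
                  [2.0, 2.0]])
cloud = depth_to_pointcloud(depth, fx=1.0, fy=1.0, cx=0.0, cy=0.0)
world = to_world(cloud, np.eye(3), np.array([0.0, 0.0, 1.0]))
print(cloud.shape)  # (3, 3)
```

Keeping the cloud in meters is what allows it to be viewed at full scale in VR; in a real-time setting the same back-projection would simply run on every incoming frame.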

Design Research Team:

- Bess Krietemeyer

- Amber Bartosh

- Lorne Covington/NOIRFLUX